Serverless Data Processing with Dataflow: Develop Pipelines

About this Course

In this second installment of the Dataflow course series, we dive deeper into developing pipelines using the Beam SDK. We start with a review of Apache Beam concepts. Next, we discuss processing streaming data using windows, watermarks, and triggers. We then cover options for sources and sinks in your pipelines, schemas to express your structured data, and how to do stateful transformations using the State and Timer APIs. We move on to reviewing best practices that help maximize your pipeline performance. Towards the end of the course, we introduce SQL and DataFrames for representing your business logic in Beam, and show how to iteratively develop pipelines using Beam notebooks.

Created by: Google Cloud

Related Online Courses

This comprehensive course will guide students through the process of building a complete web application using MongoDB, Express.js, AngularJS, and Node.js. In the first module, you will explore the... more

Welcome to the \"Tools and Techniques for Managing Stress\" course! This course offers valuable tools and techniques to effectively manage and cope with stress in both personal and professional... more

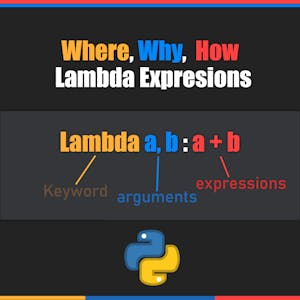

In this project we are going to learn about lambda expressions and it\'s application in python. We are going to start with what is Lambda expression and how we can define it, comparing lambda... more

You will learn how to design technologies that bring people joy, rather than frustration. You\'ll learn how to generate design ideas, techniques for quickly prototyping them, and how to use... more

Oracle Performance: Exadata, Enterprise Manager Mastery platform is designed to run Oracle Database workloads of any scale with very high performance, scalability, availability and security. Oracle... more